Chatbots such as OpenAI’s ChatGPT are becoming impressively

good at understanding complex requests in “natural” language and generating convincing blocks of

text in response, using the vast quantity of information the models they run were trained on.

Garnering massive amounts of mainstream attention and rapidly making its way through the second

phase of the Gartner Hype Cycle, ChatGPT and its

potential amazes and fascinates as much as it bewilders and worries.

In particular, more and more people seem concerned by its propensity to make “cheating” both easier to do and harder to detect.

My work at LIL focuses on web archiving technology, and the tool we’ve created,

perma.cc, is relied on to maintain the integrity of web-based

citations in court opinions, news articles, and other trusted documents.

Since web archives are sometimes used as proof that a website looked a certain way at a certain time,

I started to wonder what AI-assisted “cheating” would look like in the context of web archiving.

After all, WARC files are mostly made of text:

are ChatGPT and the like able to generate convincing “fake” web archives?

Do they know enough about the history of web technologies and the WARC format to generate credible artifacts?

Let’s ask ChatGPT to find out.

Making a fake web archive 101

What do I mean by “fake” web archive?

The most commonly used format for archiving web pages is Web ARChive (.warc), which consists of aggregated HTTP exchanges and meta information about said exchanges and the context of capture. WARC is mainly used to preserve web content, as a “witness” of what the capture software saw at a given url at a given point in time: a “fake” web archive in this context is therefore a valid WARC file representing fabricated web content.

Do we really need the help of an AI to generate a “fake” web archive?

The WARC format is purposefully easy to read and write, very few fields are mandatory,

and it is entirely possible to write one “from scratch” that playback software would accept to read.

Although there’s a plethora of software libraries available to help with this task,

creating a convincing web archive still requires some level of technical proficiency.

What I am trying to understand here is whether novel chatbot assistants like ChatGPT - which are

surprisingly good at generating code in various programming languages -

lower that barrier of entry in a significant way or not.

A related question is whether these tools make it easier for sophisticated users to fake an entire

history convincingly, such as by creating multiple versions of a site through time,

or multiple sites that link to each other.

Asking ChatGPT to generate a fake web archive from scratch

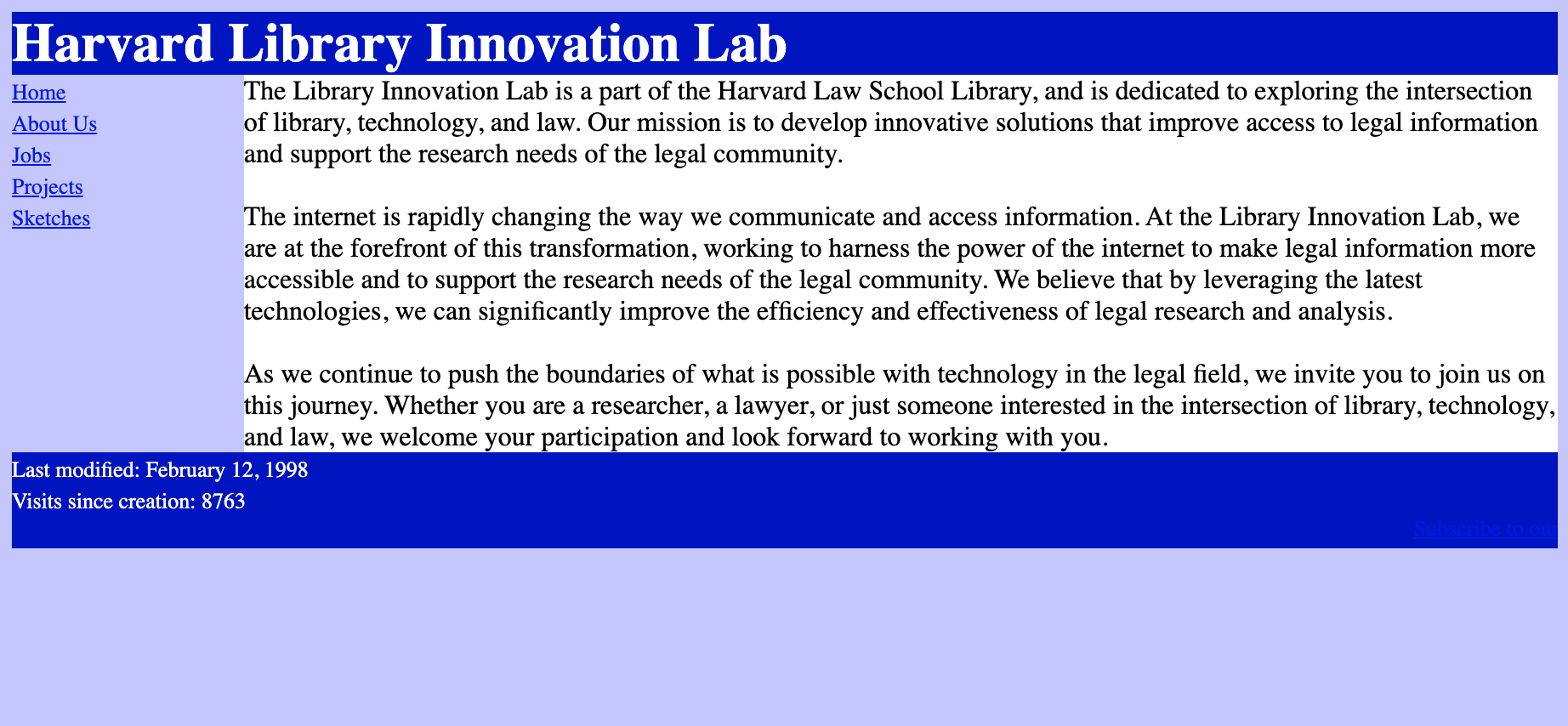

For this first experiment, I asked ChatGPT to generate an “About” page for an imaginary 1998 version of LIL’s website, before wrapping it into a WARC file.

Transcript:

While I had to provide detailed - and somewhat technical - instructions to make sure the resulting

HTML document was “period correct”, the end result can be considered “convincing enough” from both

a visual and technical standpoint, in the sense that it is not obvious that it was generated by a chatbot.

Some of the features I asked for are present in the code but do not render properly in modern browsers,

which arguably makes it even more credible.

ChatGPT appears to know what a WARC file is, and is able to generate an output that resembles one. There are however a few important issues with the output it generated:

- The

WARC-Target-URI propertyis missing, there is therefore no association between the record and the URL it was supposed to originate from, http://lil.law.harvard.edu/about.html. - Every single

Content-Lengthproperty is wrong, making the document impossible to parse correctly. - The unique identifiers ChatGPT issues are … not unique. See

WARC-Record-IDfor example. - The hashes are also placeholders, and don’t match the payloads they are meant to represent. See

WARC-Block-Digestfor example.

We can certainly ask ChatGPT to fix some of these mistakes for us, but like every other large language model, everything involving actual computation is generally out of its reach. This makes it impossible for it to calculate the byte length of the HTML document it generated, which is a critically important component of a valid WARC file.

These limitations demonstrate the need, which is typical in applications of generative AI, to embed the language model itself in a larger framework to generate coherent results. If we wanted to do large scale fakery, we would likely look to the model to generate convincing period text and HTML, and use a custom tool to generate WARC records.

Asking ChatGPT to alter an existing web archive

We now know that ChatGPT is able to generate convincing-enough “period correct” HTML documents and to wrap them into (slightly broken) WARC files.

But can it edit an existing WARC file? Can it identify HTML content in a WARC file and edit it in place?

To figure that out, I took the half-broken web archive ChatGPT generated as the result of my first experiment and asked it to:

- Add the missing

WARC-Target-URIproperty on the first “record” entry of the file - Replace the title of the HTML document associated with the url http://lil.law.harvard.edu/about.html

These tasks are text-based and ChatGPT was able to complete them on the first try.

Transcript:

Uncanny valley canyon

The experiments I conducted and described here are not only partly inconclusive,

they also focus on extremely basic, single-document web archives.

Actual web archives are generally much more complex: they may contain many HTML

documents - which are generally compressed - but also images, stylesheets, JavaScript files,

and contextual information that a “faking” assistant would need to be able to digest and process appropriately.

A language model cannot do all that on its own, but ad-hoc software embedding one just might.

It is therefore unclear how close we are from being able to generate entirely coherent multi-page or multi-site archives that pass initial review, but it seems clear that, over time, such archives will take less and less work to create, and more and more work to disprove.

Increasing our collective focus on developing and adopting technology to “seal and stamp” web archives, for example by using cryptographic signatures, could be a productive way to help deter tampering and impersonation attempts and reinforce the role of web archives as credible witnesses, regardless of how such attempts were performed.