At LIL, we’ve been providing users with the ability to preserve online sources via Perma.cc since 2013. Running a digital archive puts us in the “forever business”: what’s online today may be gone tomorrow, but that Perma Link you saved should never expire. Promising to host something forever brings different challenges than hosting something for a month or a year. There are the technical burdens: How will we guarantee these links stay accessible even as the underlying technologies continue to develop? There are logistical concerns: Where will we put all these files? There’s also a question of cost: Just how much does it cost to store a file forever?

Read more of "The Cost of a Digital Archive"

Welcoming Fellow Katy Gero

The LIL team is excited to welcome Katy Gero, who joins us to investigate ethical language models for creative writing. Katy is a post-doc at the Variation Lab at Harvard SEAS, which is led by friend of LIL Elena Glassman.

Read more of "Welcoming Fellow Katy Gero"

LLMs are universal translators: on building my own translation tools for a foreign language conference

Here is a picture of the Statue of Liberty doing a TikTok dance, as painted by van Gogh, as interpreted by ChatGPT. This is very relevant to my point and we’ll come back to it.

One of the best ways to think about large language models is as universal, personal translators. When I gave a talk at a Spanish-language library conference in Argentina recently, it was an excellent chance to test what LLMs currently offer as translators and what they might become. The answer made me optimistic about how LLMs can work as humanistic knowledge tools, in concert with library values.

Read more of "LLMs are universal translators: on building my own translation tools for a foreign language conference"

Conference talk: disruptive innovation in libraries

This piece is adapted from a keynote talk I gave at Innovación y Experiencia del Usuario at Universidad Católica de Argentina on November 1, 2023.

I was asked to give a talk on the subject of “disruptive innovation in libraries,” which isn’t necessarily the phrase I would choose to describe our work, but I enjoyed using that lens to explore the changes all libraries are going through.

If you want to skip around, Part 1 explores the disruptive changes libraries are experiencing with the arrival of the internet over the last forty years; Part 2 proposes a new mission for libraries in reweaving cultural memory for the internet age; and Part 3 outlines what I’ve learned so far about leading “disruptive innovation” within large, established institutions.

When I think about disruptive innovation in libraries I think about two stories.

Read more of "Conference talk: disruptive innovation in libraries"

AI Book Bans: Testing LLMs Against the Freedom to Read

What happens when large language models are asked to provide justifications for book bans? Do the same built-in guardrails that prevent them from generating pipe-bomb recipes kick in, or do models do their best to comply with the user’s request? How do models go about providing such justifications while navigating their “knowledge” of library principles? And what could this all mean for the future of our freedom to read?

AI is promising to transform the way we navigate our increasingly complex world by augmenting our capacity to access and process information. The widely reported case of the Iowa school district that, under pressure and a tight compliance deadline from new state legislation, relied on ChatGPT’s answers to decide which books to remove from its library collections is a manifestation of a deeper, tectonic, and sometimes ill-informed shift in our relationship to knowledge that this AI moment is driving.

While the perceived affordances of AI can be alluring, AI also carries inherent risks. These recent developments have inspired, if not alarmed, us, prompting an experimental study to address some of these increasingly pressing questions and to advocate for the emergence of a “Librarianship of AI” that emphasizes the necessity of testing, documenting and reporting on the behavior of collections of models, guided by library principles.

Read more of "AI Book Bans: Testing LLMs Against the Freedom to Read"

Mysterious Search Algorithms

Lawyers use search algorithms on a daily or even hourly basis, but the way they work often remains mysterious. Users receive pages and pages of results from searches that ostensibly are based on some relevancy standard, seemingly guaranteeing that the most important results are all found. But that may not always be the case. This post explores the mystery of search algorithms from a legal research perspective. It examines what is wrong with algorithms being mysterious, explores our current knowledge of how they work, and makes recommendations for the future.

Read more of "Mysterious Search Algorithms"

“Did ChatGPT really say that?”: Provenance in the age of Generative AI.

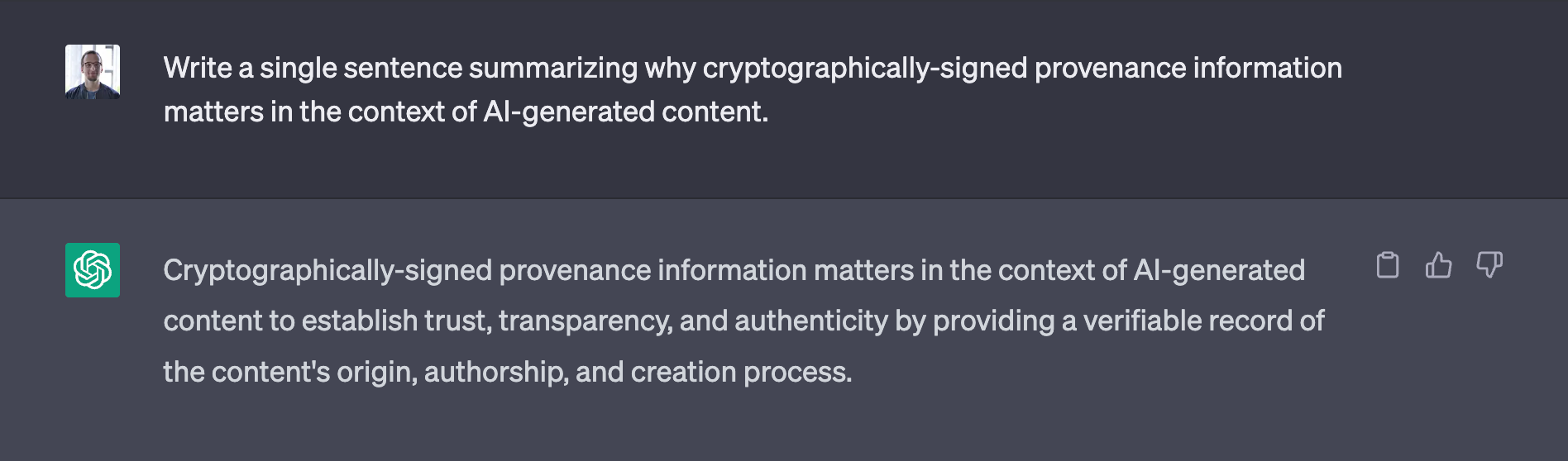

Prompt: “Write a single sentence summarizing why cryptographically-signed provenance information matters in the context of AI-generated content.”

ChatGPT: “Cryptographically-signed provenance information matters in the context of AI-generated content to establish trust, transparency, and authenticity by providing a verifiable record of the content’s origin, authorship, and creation process.”

That’s a great point, but did ChatGPT really say that, or did I make that up to trick you into reading this article? I could show you a screenshot of that exchange to try to convince you …

… but what good would it do, when it’s so easy to produce convincing fakes?

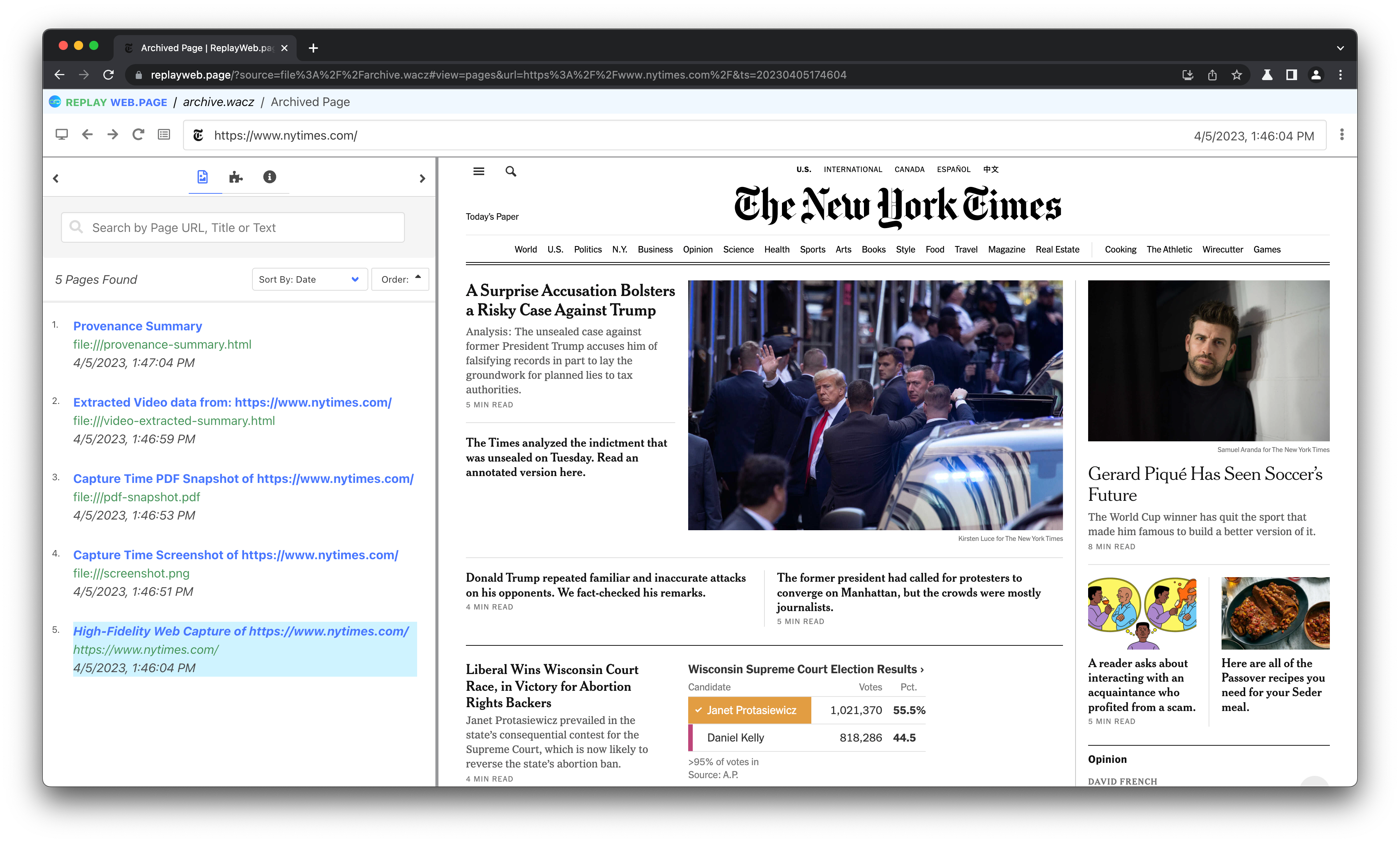

Read more of "“Did ChatGPT really say that?”: Provenance in the age of Generative AI."

Witnessing the web is hard: Why and how we built the Scoop web archiving capture engine 🍨

There is no one-size-fits-all when it comes to web archiving techniques, and the variety of tools and services available to capture web content illustrates the wide, ever-growing set of needs in the web archiving community. As these needs evolve, so do the web and the underlying challenges and opportunities that capturing it presents. Our decade of experience running Perma.cc has given our team a vantage point to identify emerging challenges in witnessing the web that we believe extend well beyond our core mission of preserving citations in the legal record. In an effort to expand the utility of our own service and contribute to the wider array of core tools in the web archiving community, we’ve been working on a handful of Perma Tools.

In this blog post, we’ll go over the driving principles and architectural decisions we’ve made while designing the first major release from this series: Scoop, a high-fidelity, browser-based, single-page web archiving capture engine for witnessing the web. As with many of these tools, Scoop is built for general use but represents our particular stance, cultivated while working with legal scholars, US courts, and journalists to preserve their citations. Namely, we prioritize their needs for specificity, accuracy, and security. These are qualities we believe are important to a wide range of people interested in standing up their own web archiving system. As such, Scoop is an open-source project which can be deployed as a standalone building block, hopefully lowering a barrier to entry for web archiving.

Introducing Reading Mode for H2O

We are excited to announce the release of our “reading mode”, a new casebook view that offers students a cohesive digital format to facilitate deep reading.

We think better design of digital reading environments can capture the benefits of dynamic online books while orienting readers to an experience that encourages deeper analysis. Pairing that vision with our finding that more students are seeking digital reading options, we identified an opportunity to develop a digital reading experience that is streamlined, centralized, and most likely to encourage deep reading.

Read more of "Introducing Reading Mode for H2O"

IIPC Technical Speaker Series: Archiving Twitter

I was invited by the International Internet Preservation Consortium (IIPC) to give a webinar on the topic of “Archiving Twitter” on January 12.

During this talk, I presented what we’ve learned building thread-keeper, the experimental open-source software behind social.perma.cc, which allows for making high-fidelity captures of twitter.com URLs as “sealed” PDFs.

Read more of "IIPC Technical Speaker Series: Archiving Twitter"